How We Used Redux on the Backend and Got an Offline-First Mobile App as a Result

Preface

Today we want to share our experience of building an offline-first React Native application using a Redux-like approach on our Node.js backend server.

In fact, we leveraged the so-called event sourcing pattern in our system without any specialized tools for it (e.g. Samza or Kafka). Although you may prefer to use these tools in your project, what I’m trying to show here is that by understanding the core principles behind event sourcing you can gain the benefits of this approach without changing the whole architecture of your system or adopting rocket-science technologies and tools.

Let’s get started.

Problem description

So what type of application are we talking about? It is quite a massive Learning Management System that consists of three main parts:

- Web-client (React + Redux)

- iOS-client and Android-client (React-Native + Redux)

- Backend (Node JS + MongoDB + Redux?)

Also I should mention the following general requirements for the system:

- Offline mode for mobile, so the user can read data while offline as well as perform any actions, and we should reflect the results of these actions in the app

- Detailed analytics on mobile and web, which may or may not be the same; for example, on web we can show aggregated statistics for a group of users and on mobile just an individual person’s results

- Requirements for analytics are constantly evolving, which practically means that at any time your boss can come to your desk and say something like “Hey, I’m glad that you’ve been working so hard on these charts for the last month, but we just decided that we need some completely different metrics and statistics”

- Audit trail support, because the project is closely tied to the legal field

But first let’s take a step back and ponder how we used to process data, because this question is crucial for our future solution.

How we think about data

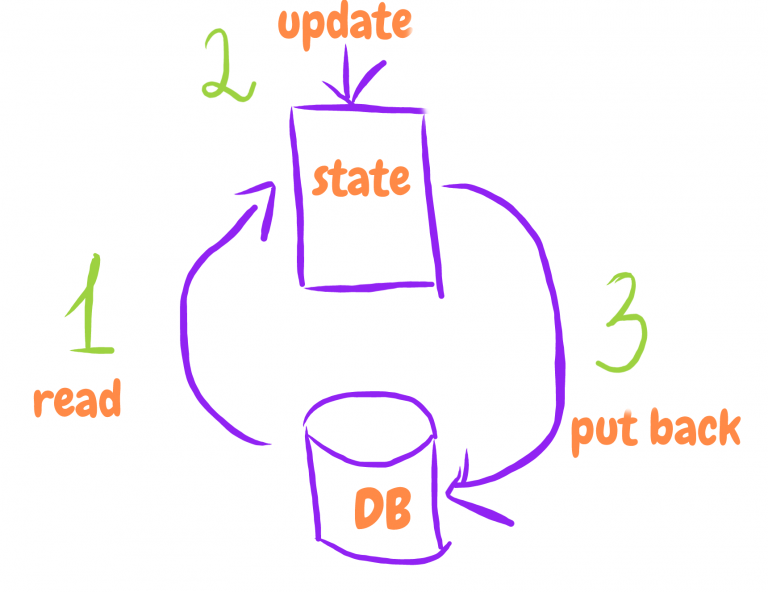

The actual result of any operation in an OS is a memory transformation. In an application, memory is generally represented by state. It may be a complicated DB, a raw file or just a plain JavaScript object. Similarly to an OS, the result of any action in our application is a state transformation. And what matters is not so much how we represent our state but how we change it.

The common approach to this task is to maintain only the currently relevant state and mutate it as the user performs actions. Take the standard create, read, update, delete (CRUD) model. The typical flow in this model is to fetch data, modify it somehow and put the updated data back into storage. You also need to control concurrent updates, because they can really mess everything up if the state of your whole application is represented by the currently accumulated piece of data.

As software engineering rapidly evolved, developers realized that this traditional way of thinking about data is not very effective in some types of applications (say, enterprise ones). As a result, new architectural patterns started to emerge.

I really encourage you to get acquainted with the DDD, CQRS and Event Sourcing patterns (they are closely related and often mentioned side by side) to get a wider view of data processing, but in this article I won’t dig deep into any of them. Our main point of interest here is event sourcing and how it changes the way we think about data.

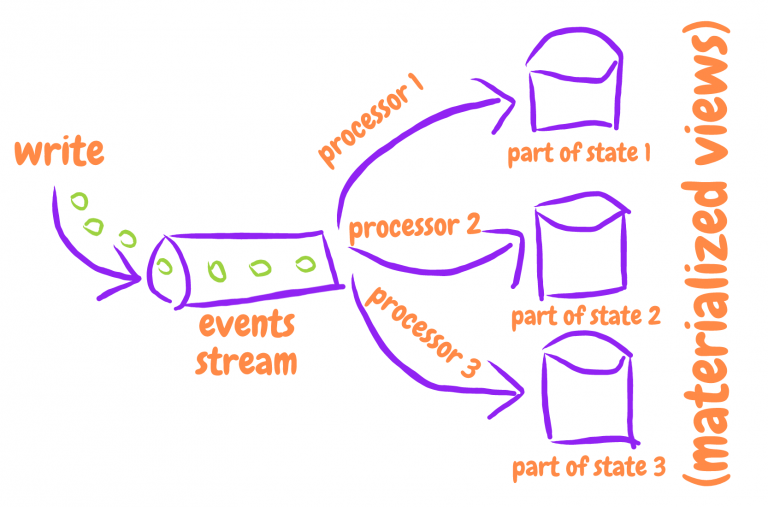

What if, instead of keeping one aggregated state of the data, we recorded all of the actions taken on that data and post-processed them later? It’s as if we don’t know yet exactly how an action should influence the state, but we can’t just ignore it either. So we keep appending these actions to our log and use them later, once we figure out how to deal with them.

Wait but why?

At first you may think that this approach is very weird: why should we keep all these events and process them later if the only result we want is to change the state? Doesn’t it add more complexity to our system and extra work to perform?

Yes, it does, but it also gives a lot of invaluable benefits which we would otherwise be deprived of. In fact it’s quite a common solution, and to convince yourself just take a look at real life.

When you visit a doctor and bring your medical file with you (or it may be in electronic form), does your doctor cross out all the information in your file and write down just your current physical condition? Or does he keep appending your health status to your file, so over time you have a complete picture of how things went and a full history of all the problems you’ve encountered? That’s an event sourcing model.

Or take law. Let’s say you’ve signed a contract and after some time you decide to review the conditions and make some changes. Do you tear up your old contract and create a brand new one from scratch, or do you make an addendum to the previous contract? As years go by, you can always check the initial contract and all the addendums that followed to correctly identify what the contract eventually says. That’s event sourcing as well.

By the way, I can’t really understand how we became so confident that we don’t need to store the data. We can’t even imagine how valuable a piece of data may be in some situation, so why do we neglect it so easily? Wouldn’t it be much more rational to keep it?

When not to use it

I will show you all of the benefits we gained from this approach in our application later, but you definitely should keep in mind that it’s not suitable for all cases. If your system has little or no business logic, is not in danger of concurrent updates, works naturally well with CRUD, or has real-time updates between data and views, then you are probably good to go without event sourcing at all. But in other cases you can gain a lot of advantages if you incorporate at least some of the patterns that will be discussed later.

And what about Redux

As we all know, a few years ago Facebook suggested a new approach to state management on the frontend called Flux, which relies heavily on unidirectional data flow and differs completely from how things previously worked (e.g. two-way data binding in Angular). Redux enhanced this approach with some new concepts and took an honorable place in the modern frontend developer’s toolset.

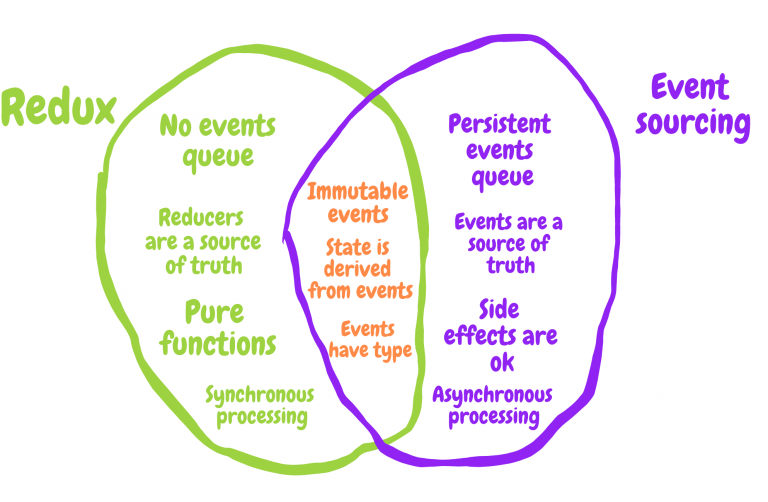

In order to change the state you need to dispatch an action, and an action is in fact an event description, or an event itself. As a reaction to this event we change our state accordingly. So it’s pretty similar to the event sourcing pattern. How does Redux really relate to event sourcing?

First of all, we should mention that Redux and event sourcing serve the same purpose: managing transactional state. The main difference is that Redux goes a lot further with prescriptions on how to actually do it.

Redux explicitly demonstrates how reducers over actions (events) can be the single source of truth for the application state. Event sourcing on the other hand never prescribed how events should be processed, only their creation and recording.

Looking at typical event sourcing implementations, you can encounter a lot of object-oriented and imperative code with side effects all over the place.

Redux, on the other hand, favours pure functions (without any side effects by definition), which leans more toward the functional programming paradigm.

When you do event sourcing you often emphasize that your events are the source of truth and you rely on them heavily. But with Redux it’s more like the reducers are the source of truth. Take the same actions but different reducers and you’ll get a completely different state as a result.
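To make the last point concrete, here is a minimal sketch. The two reducers and the shape of the actions are made up for illustration and are not taken from our code base; the only thing it shows is that one list of actions yields different states depending on the reducer applied to it.

```javascript
// The same list of actions (events) for both reducers
const actions = [
    { type: 'ANSWER_QUESTION', payload: { isCorrect: true } },
    { type: 'ANSWER_QUESTION', payload: { isCorrect: false } },
    { type: 'ANSWER_QUESTION', payload: { isCorrect: true } }
];

// Hypothetical reducer: counts every answer
const totalAnswersReducer = (state = 0, action) =>
    (action.type === 'ANSWER_QUESTION' ? state + 1 : state);

// Hypothetical reducer: counts only correct answers
const correctAnswersReducer = (state = 0, action) =>
    (action.type === 'ANSWER_QUESTION' && action.payload.isCorrect ? state + 1 : state);

const totalAnswers = actions.reduce(totalAnswersReducer, 0);     // 3
const correctAnswers = actions.reduce(correctAnswersReducer, 0); // 2
```

The actions stay untouched in both cases; only the interpretation changes.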

Almost any event sourcing solution relies heavily on an event queue and, as a result, on the ability to process events asynchronously. Redux, by contrast, prefers to handle all actions synchronously and does not create any queues.

You could name even more differences, but now let’s concentrate on two very important concepts that event sourcing and Redux share.

- The state is derived from events

- Events are immutable. This is very important if we want a really predictable and stable state which we can traverse back and forward in time. But not only that: event immutability offers some more interesting perks, and we’ll get back to them later

Let the fight begin

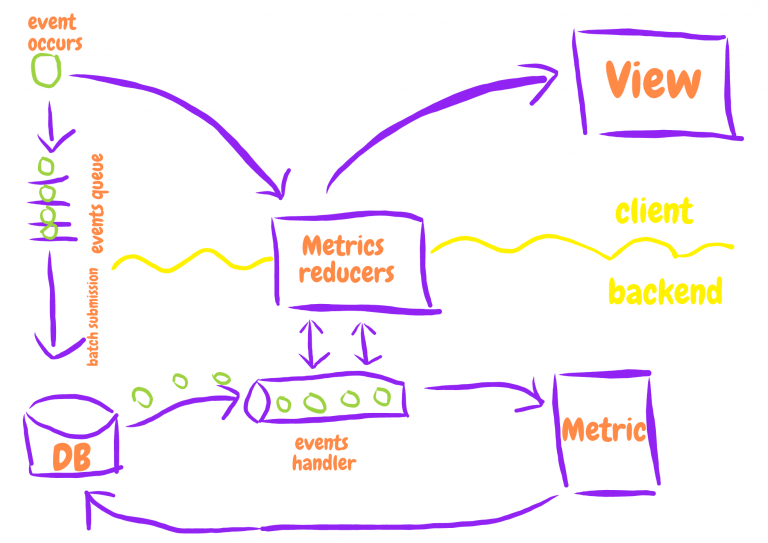

So let’s get closer to practice. Here is an overview of the situation we have in our application.

The user is offline. He takes a test. We should:

- React to his actions on mobile and reaggregate analytics based on his test results

- Somehow obtain the same reaggregated analytics in our DB on the backend, so we can show it in a completely separate web part of the application

The first part of the task is not much of a problem. Optimistic updates to the rescue. We can fire actions and recalculate analytics in the mobile app even in offline mode, because obviously an internet connection is not a requirement for Redux to work.

But how do we deal with the second problem? Let’s just save our actions somewhere for now, so we don’t lose what our user did while he was offline.

We should do this for every event that needs outside processing. So I suggest creating a separate action creator file (however, this depends on your preferred React project organization) where we describe an action creator for every event of interest, like this:

    /* We rely on thunk middleware to deal with asynchronous actions.
       If you are not familiar with the concept, go check it out,
       it may help you to understand our action creator's structure better */
    export function createQuestionAnswerEvent(payload) {
        return (dispatch) => {
            const formattedEvent = {
                type: ActionTypes.ANSWER_QUESTION,
                // It's crucial to have a timestamp on every event
                timestamp: new Date().toISOString(),
                payload
            };

            // Dispatch the event itself, so our regular reducers that are responsible
            // for analytics can process it and recalculate the statistics
            dispatch(formattedEvent);

            // Dispatch the action that declares that we want to save this particular event
            dispatch({ type: ActionTypes.SAVE_EVENT, payload: formattedEvent });

            // At some point we are going to send all saved events to the backend,
            // but let's get to that later
            dispatch(postEventsIfLimitReached());
        };
    }

And here is the corresponding reducer, which takes every action with type SAVE_EVENT and pushes it to the branch of our state where we collect all events, represented by a raw array:

    const initialState = [];

    export default (state = initialState, action) => {
        switch (action.type) {
            // Append the event to our events log
            case ActionTypes.SAVE_EVENT:
                return state.concat(action.payload);

            // After sending events to the backend we can clear them
            case ActionTypes.POST_EVENTS_SUCCESS:
                return [];

            /* If the user wants to clear the client cache and make sure
               that all analytics is backend based, he can press a button,
               which will fire the CLEAR_STORAGE action */
            case ActionTypes.CLEAR_STORAGE:
                return [];

            default:
                return state;
        }
    };

Now we have saved all of the actions which the user performed while he was offline. The next logical step would be to send these events to the backend, and that’s exactly what the postEventsIfLimitReached function you’ve seen before does.

It’s crucial to make sure that the user is online before attempting to post events. It’s also preferable to send events not one by one but in batches, because if your event logging is intense and the user produces several actions per second, you don’t want to fire HTTP requests that often.

    export function postEventsIfLimitReached() {
        return async (dispatch, getState) => {
            const events = getState().events;

            /* If enough events have accumulated and the user is online, submit them as a batch */
            if (events.length > config.EVENTS_LIMIT && getState().connection.isConnected) {
                try {
                    await api.events.post(events);
                    dispatch({ type: ActionTypes.POST_EVENTS_SUCCESS });
                } catch (e) {
                    dispatch({ type: ActionTypes.POST_EVENTS_FAIL });
                }
            }
        };
    }

Seems nice. We got optimistic updates in our mobile app and we managed to deliver every user action to the backend.

Switching to the back

Let’s switch to the backend for now. We have a pretty standard POST route in our Node.js app, which receives a batch of events and saves them into MongoDB.

Take a quick look at the event schema:

    const EventSchema = new Schema({
        employeeId : { type: 'ObjectId', required: true },
        type       : {
            type     : String,
            required : true,
            enum     : [
                'TRAINING_HEARTBEAT',
                'ANSWER_QUESTION',
                'START_DISCUSSION',
                'MARK_TOPIC_AS_READ',
                'MARK_COMMENT_AS_READ',
                'LEAVE_COMMENT',
                'START_READING_MODE',
                'FINISH_READING_MODE',
                'START_QUIZ_MODE',
                'FINISH_QUIZ_MODE',
                'OPEN_TRAINING',
                'CLOSE_APP'
            ]
        },
        timestamp   : { type: Date, required: true },
        isProcessed : { type: Boolean, required: true, default: false },
        payload     : { type: Object, default: {} }
    });

I’ll omit the description of the route, because there is really nothing special in it. Let’s move on to more interesting things.

So now that we have all our events in our DB, we should process them somehow.

We decided to create a separate class for this purpose, which is imported in the entry file of our application like this:

    import EventsHandler from './lib/EventsHandler';

    const eventsHandler = new EventsHandler();

    eventsHandler.start();

Now let’s take a look at the class itself.

    export default class EventsHandler {
        // Schedule the first processing round
        start() {
            this.planNewRound();
        }

        stop() {
            clearTimeout(this.timeout);
        }

        // Fire processing of new events every once in a while
        planNewRound() {
            this.timeout = setTimeout(async () => {
                try {
                    await this.main();
                } catch (e) {
                    // Keep the loop alive even if a single round fails
                    console.error('Error during events processing round', e);
                }

                this.planNewRound();
            }, config.eventsProcessingInterval);
        }

        async main() {
            const events = await this.fetchEvents();

            await this.processEvents(events);
        }

        async processEvents(events) {
            const metrics = await this.fetchMetricsForEvents(events);

            /* Here we should process events somehow.
               But HOW???
               We'll get back to it later
            */

            /* It's critical to mark events as processed afterwards,
               so we don't fetch and apply the same events every time */
            await Promise.all(events.map(this.markEventAsProcessed));
        }

        async markEventAsProcessed(event) {
            event.set({ isProcessed: true });

            return event.save();
        }

        async fetchMetricsForEvents(events) {
            /* I removed a lot of domain-related code from this method for the sake
               of simplicity. What we are doing here is accumulating the ids of Metrics
               related to every event from the events argument.
               That's how we get metricsIds */
            return Metric.find({ _id: { $in: metricsIds } });
        }

        async fetchEvents() {
            return Event.find({ isProcessed: false }).limit(config.eventsFetchingLimit);
        }
    }

This is a very simplified version of our class, but it reflects the main idea well enough for you to grasp it. I removed a lot of methods, checks and domain-related logic so you can concentrate purely on event processing.

So what we are doing with this class is:

- fetching unprocessed events from the DB

- fetching all data related to the events that is necessary for processing. In our case it’s mostly Metric documents, which represent individual (Session Metric) and group (Training Metric) statistics

- processing events somehow and changing the metrics

- saving changed data

- marking events as processed

It’s not a perfect solution for several reasons, but it completely matches our needs. In case you want a more bulletproof solution, consider these points:

- This solution is periodic, which means that in some cases you can skip some events and leave them unprocessed

- We use MongoDB for event storage, but you may prefer a more appropriate tool for this (e.g. Kafka)

- Our events get a standard MongoDB ObjectId, but it may be more convenient to use an incremental identifier for each event alongside or instead of the primary id. That way you would have a more solid grasp of what’s going on and what the correct order of your events is

- Prioritization is missing, but in some cases you would prefer to process some events before others
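As an illustration of the incremental identifier idea, here is a minimal sketch. It assumes every event carries a hypothetical numeric `seq` field (our schema above does not have one) and restores the recording order before the events are reduced.

```javascript
// Return a new array ordered by the hypothetical incremental identifier `seq`
function sortBySequence(events) {
    // Copy first, because Array.prototype.sort() sorts in place
    return [...events].sort((a, b) => a.seq - b.seq);
}

const batch = [
    { seq: 3, type: 'FINISH_QUIZ_MODE' },
    { seq: 1, type: 'START_QUIZ_MODE' },
    { seq: 2, type: 'ANSWER_QUESTION' }
];

const orderedTypes = sortBySequence(batch).map((event) => event.type);
// orderedTypes: ['START_QUIZ_MODE', 'ANSWER_QUESTION', 'FINISH_QUIZ_MODE']
```

How the identifier gets assigned (on the client, on the server, or both) is a separate design decision that this sketch does not cover.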

Troubles with processing

OK, let’s go on. The event handling structure seems to be ready. But we still have the most intricate question to resolve: how should we process the events?

The huge problem that arises is the difference between our optimistic updates on the client and the results we get here. And it’s a very nasty bug from a UX point of view: e.g. a user takes a test offline and sees that he has already completed 81% of the test, but once he goes online he suddenly discovers that his actual progress is somehow 78%, and he has a lot less time to deal with the test than he was told in offline mode.

So keeping analytics in sync on both client and server is quite a massive problem. And if you finally manage to achieve it somehow, then suddenly your QA discovers a bug in the calculations and you have to fix it on the client and on the backend separately and make sure that the fix produces equal results on both platforms. Or, what is even more common, business requirements change and now you have to rewrite the processing on both platforms, and chances are you won’t get matching results. Again.

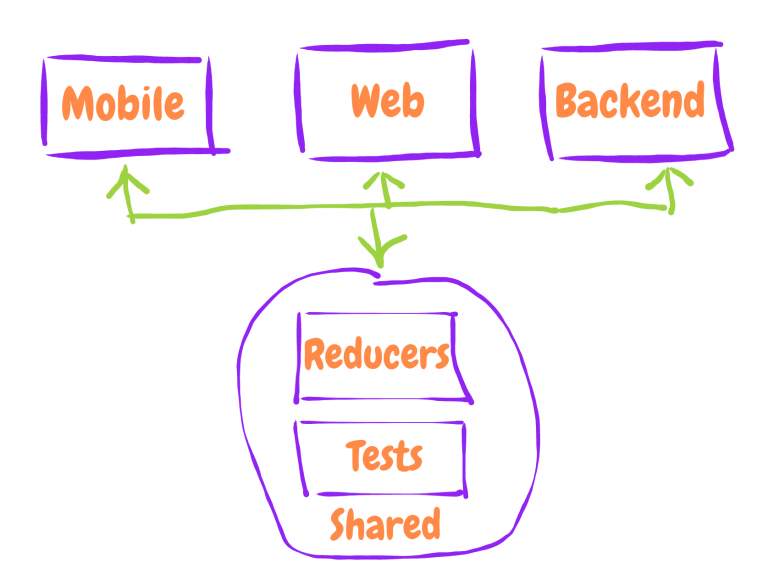

Code reuse to the rescue

This is really frustrating. Why should we do double work and suffer from inconsistencies? What if we could reuse the processing code? This idea may sound crazy at first, because on the client side we use what Redux has to offer for state management and nothing similar on the backend. But let’s take a closer look at how we do it with Redux.

Reducers are just pure functions that take the current state and an action as parameters and return a whole new state. The seemingly magical part, which really simplifies the day-to-day life of developers, is that Redux calls the reducer function itself, without us explicitly telling it to. So we just dispatch an action somewhere and we are sure that Redux will call all declared reducers, provide them with this action and the appropriate current state, and save the new state branch to the corresponding place in the state.
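To demystify that part a little, here is a deliberately tiny sketch of what a store does around reducers. It is an illustration only, not Redux’s actual implementation; `createTinyStore` and the `INCREMENT` action are made-up names.

```javascript
// A toy store: keeps the state and calls every reducer on each dispatched action
function createTinyStore(reducers) {
    let state = {};

    function dispatch(action) {
        const nextState = {};

        // Each reducer receives only its own branch of the state plus the action
        for (const [branch, reducer] of Object.entries(reducers)) {
            nextState[branch] = reducer(state[branch], action);
        }

        state = nextState;
    }

    // Let every reducer produce its initial state via its default parameter
    dispatch({ type: '@@INIT' });

    return { dispatch, getState: () => state };
}

// Hypothetical reducer used only for this demonstration
const counterReducer = (state = 0, action) =>
    (action.type === 'INCREMENT' ? state + 1 : state);

const store = createTinyStore({ counter: counterReducer });

store.dispatch({ type: 'INCREMENT' });
store.dispatch({ type: 'INCREMENT' });

const counter = store.getState().counter; // 2
```

Everything in `createTinyStore` is plumbing; the business logic lives entirely in the reducer.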

But what if we reuse the reducer functions and do all of the hard work around them on the backend ourselves, without Redux?

Excited? I bet you are, but here are a few things we should keep in mind so we don’t screw everything up:

- Reducers must be pure functions with business logic and without any side effects. All side effects should be kept separately (e.g. DB interactions: we have a lot of them in our EventsHandler class itself, but they are forbidden in reducers)

- Never mutate Metrics and events

Well, here is the full version of the processEvents function you’ve seen before:

    async processEvents(events) {
        const metrics = await this.fetchMetricsForEvents(events);

        await Promise.all(metrics.map(async (metric) => {
            /* sessionMetricReducer and trainingMetricReducer are just functions
               that are imported from a separate repository and are reused on the client */
            const reducer = metric.type === 'SESSION_METRIC' ? sessionMetricReducer : trainingMetricReducer;

            try {
                /* Beautiful, huh? 🙂
                   Just a line which reduces hundreds or thousands of events
                   to one aggregate Metric (say, an analytical report)
                */
                const newPayload = events.reduce(reducer, metric.payload);

                /* If an event should not affect the metric in any way,
                   our reducers just return the state they received, so we can
                   have this convenient comparison by reference here */
                if (newPayload !== metric.payload) {
                    metric.set({ payload: newPayload });

                    return metric.save();
                }
            } catch (e) {
                console.error('Error during events reducing', e);
            }

            return Promise.resolve();
        }));

        await Promise.all(events.map(this.markEventAsProcessed));
    }

Here is a quick explanation of what’s going on.

sessionMetricReducer is a function that calculates an individual user’s statistics, and trainingMetricReducer is a function that calculates group statistics. Both are pure as hell. We keep them in a separate repository, covered with unit tests from head to toe, and import them on the client as well. That is called code reuse 🙂 And we’ll get back to them later.

I bet you all know how the reduce function works in JS, but here is a quick overview of what’s going on in const newPayload = events.reduce(reducer, metric.payload).

We have an array of events, which is an analogue of Redux’s actions. They have a similar structure and serve the same purpose (I’ve already shown the structure of an event during its creation on the client in the createQuestionAnswerEvent function).

metric.payload is our initial state, and all you have to know about it is that it’s a plain JavaScript object.

So the reduce function takes our initial state and passes it with the first event to our reducer, which is just a pure function that calculates the new state and returns it. Then reduce takes this new state and the next event and passes them to the reducer again. It does this until every event has been applied. At the end we get a completely new Metric payload, which was influenced by every single event! Excellent.

A more bulletproof notation

Although the notation events.reduce(reducer, metric.payload) is very concise and simple, we may hit a pitfall here if one of the events is invalid. In that case we will catch an exception for the whole batch (not only for this invalid event) and won’t be able to save the result of the other valid events.

If this possibility exists for your type of events, it is preferable to apply them one by one and save the Metric after each applied event, like this:

    for (const event of events) {
        try {
            // Pay attention to the parameter order:
            // the reducer takes the state first and the event second
            const newPayload = reducer(metric.payload, event);

            if (newPayload !== metric.payload) {
                metric.set({ payload: newPayload });

                await metric.save();
            }
        } catch (e) {
            console.error('Error during events reducing', e);
        }
    }

Here is a reducer

As you may guess, the main challenge here is to keep Metric.payload on the backend and the state branch that represents the user’s statistics on the client in the same structure. That’s the only way to make code reuse work. By the way, the events are already the same, because, as you may remember, we create them on the frontend, dispatch them through the client reducers first and only then send them to the server. As long as these two conditions are met, we can freely reuse the reducers.

Here is a simplified version of sessionMetricReducer, so you can see that it is just a plain function, nothing scary:

    import moment from 'moment';

    /* All of these are pure functions, each responsible for a separate part
       of the Metric indicators (say, separate branches of state) */
    import { spentTimeReducer, calculateTimeToFinish } from './shared';
    import {
        questionsPassingRatesReducer,
        calculateAdoptionLevel,
        adoptionBurndownReducer,
        visitsReducer
    } from './session';

    const initialState = {
        timestampOfLastAppliedEvent : '',
        requiredTimeToFinish        : 0,
        adoptionLevel               : 0,
        adoptionBurndown            : [],
        visits                      : [],
        spentTime                   : [],
        questionsPassingRates       : []
    };

    export default function sessionMetricReducer(state = initialState, event) {
        const currentEventDate = moment(event.timestamp);
        const lastAppliedEventDate = moment(state.timestampOfLastAppliedEvent);

        // Pay attention here, we'll discuss this line below
        if (currentEventDate.isBefore(lastAppliedEventDate)) return state;

        switch (event.type) {
            case 'ANSWER_QUESTION': {
                const questionsPassingRates = questionsPassingRatesReducer(
                    state.questionsPassingRates,
                    event
                );
                const adoptionLevel = calculateAdoptionLevel(questionsPassingRates);
                const adoptionBurndown = adoptionBurndownReducer(state.adoptionBurndown, event, adoptionLevel);
                const requiredTimeToFinish = calculateTimeToFinish(state.spentTime, adoptionLevel);

                return {
                    ...state,
                    adoptionLevel,
                    adoptionBurndown,
                    requiredTimeToFinish,
                    questionsPassingRates,
                    timestampOfLastAppliedEvent : event.timestamp
                };
            }

            case 'TRAINING_HEARTBEAT': {
                const spentTime = spentTimeReducer(state.spentTime, event);

                return {
                    ...state,
                    spentTime,
                    timestampOfLastAppliedEvent : event.timestamp
                };
            }

            case 'OPEN_TRAINING': {
                const visits = visitsReducer(state.visits, event);

                return {
                    ...state,
                    visits,
                    timestampOfLastAppliedEvent : event.timestamp
                };
            }

            default: {
                return state;
            }
        }
    }

Take a look at the check at the beginning of the function. We want to make sure that we don’t apply an event to the metric if later events have already been applied. This check helps to ignore invalid events, should one occur.

That’s a good example of the principle of always keeping your events immutable. If you want to store some additional information, you shouldn’t add it to the event; better keep it somewhere in the state. That way you can rely on events as a source of truth at any point in time. You can also set up parallel processing of events on several machines to achieve better performance.
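The timestamp check can also be looked at in isolation. The sketch below is a stripped-down stand-in for our reducer, not the real one: `answersReducer` is a made-up name, and it uses the built-in Date.parse instead of moment to stay dependency-free.

```javascript
const initialState = { timestampOfLastAppliedEvent: '', answers: 0 };

function answersReducer(state = initialState, event) {
    // Date.parse('') is NaN, and any comparison with NaN is false,
    // so the very first event always passes the check below
    const lastApplied = Date.parse(state.timestampOfLastAppliedEvent);
    const current = Date.parse(event.timestamp);

    // An event older than the last applied one is ignored: the same state object is returned
    if (current < lastApplied) return state;

    if (event.type !== 'ANSWER_QUESTION') return state;

    // Extra bookkeeping goes into the state, the event itself is never modified
    return { answers: state.answers + 1, timestampOfLastAppliedEvent: event.timestamp };
}

const events = [
    { type: 'ANSWER_QUESTION', timestamp: '2020-01-01T10:00:00.000Z' },
    { type: 'ANSWER_QUESTION', timestamp: '2020-01-01T10:05:00.000Z' },
    { type: 'ANSWER_QUESTION', timestamp: '2020-01-01T09:00:00.000Z' } // arrived too late
];

const result = events.reduce(answersReducer, initialState);
// result.answers is 2: the late event did not change the state
```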

Final overview

So here is the final project structure.

As I mentioned before, we keep our shared reducers in a separate repository and import them on the client and on the server. So here are the real benefits that we achieved by reusing the code and storing all of the events:

- Offline work on mobile

- Analytics on mobile, web and backend are always in sync

- Reducers are easy to maintain and extend. You have to fix a bug only in one place. You have to add tests only in one place

- If we find a bug in our calculations we can fix it and recalculate all of the statistics from the beginning of time

- We can easily travel in time through statistics, moving several years back and forward

- Whenever business requirements for analytics change, we can easily create and calculate new metrics

- Event handling can be run in the background, independent of the user’s main flow of work with the app, which can vastly improve performance and responsiveness of the application

- The availability of all events greatly helps to test and debug the system, because you can easily replay the events at any time and observe how the system reacts

- We gained more control over analytics calculation. E.g. on mobile we calculate only individual statistics, but on the server, alongside single-user statistics, we calculate diverse group statistics and much more important stuff. We are not bound to real-time user interaction on the client to do this. We can do whatever we want, however we want, with these stored events

- A persistent list of events is an endless source for analyzing user behaviour in our system and identifying the main trends and issues that users struggle with every day. We can retrieve a lot of useful business information from these logs

- Complete history and audit trail out of the box
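The recalculation and time travel points from the list above boil down to replaying stored events through a reducer. Here is a minimal sketch under simplified assumptions: `replayUntil` and `visitsCountReducer` are hypothetical names, and the events are plain objects rather than documents from our DB.

```javascript
// Hypothetical reducer: counts how many times a training was opened
function visitsCountReducer(state = 0, event) {
    return event.type === 'OPEN_TRAINING' ? state + 1 : state;
}

// Rebuild the state as it was at the moment `until` by replaying only older events
function replayUntil(events, reducer, initialState, until) {
    const cutoff = Date.parse(until);

    return events
        .filter((event) => Date.parse(event.timestamp) <= cutoff)
        .reduce((state, event) => reducer(state, event), initialState);
}

const storedEvents = [
    { type: 'OPEN_TRAINING', timestamp: '2019-03-01T09:00:00.000Z' },
    { type: 'OPEN_TRAINING', timestamp: '2020-06-15T12:30:00.000Z' },
    { type: 'OPEN_TRAINING', timestamp: '2021-01-10T08:00:00.000Z' }
];

const visitsAtEndOf2020 = replayUntil(storedEvents, visitsCountReducer, 0, '2020-12-31T23:59:59.999Z'); // 2
const visitsAtEndOf2021 = replayUntil(storedEvents, visitsCountReducer, 0, '2021-12-31T23:59:59.999Z'); // 3
```

Swapping in a fixed or brand new reducer and replaying the same events is all it takes to recalculate a metric or to introduce a new one.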

By the way, the described approach favours streams in general. If you are not familiar with the concept, in short, streams are a way of thinking about data flow in our application. Instead of the traditional request-response model we work with an endless queue of events to which we should react somehow.

And this approach can make some future issues easier to tackle. E.g. what if we want to update analytics in the web app in real time? All we have to do is subscribe to data changes on the client (the connection may be established via WebSockets, for example), and whenever data changes on the backend we send a message of a specific type to notify the client.

This is a rough description of a common approach to working with streams of data, which can help us solve the problem quite effectively.

Conclusion

As you can see, we obviously didn’t implement the most canonical example of event sourcing. We also didn’t fully incorporate Redux with all its ecosystem into our Node.js application. But we didn’t really need any of that. The main purpose was to create a stable offline-first mobile application with fluid and extensible business logic and shared analytical reports. And that’s what we managed to do in a quite unusual and effective way.

So I hope it was an interesting and useful read for you. If you have any questions or observations, feel free to leave a comment below.

Finally, here is a quick overview of some quite nasty problems that you may encounter when building an offline-first application and the way we dealt with them.

Common issues with offline and how we deal with them

Issue 1. I’ve fixed a bug in calculations but the client is still using the old cached version of analytics. How to force it to use recalculated metrics from backend?

We introduced a version property for each metric (a kind of ETag, for those familiar with common cache invalidation strategies). When the client fetches a metric from the server, we compare the client’s version with the server’s version and figure out which metric is more relevant. The one with the higher version wins. So after a bug fix all we have to do is increase the version number, and the client will know that its metrics are outdated.
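A minimal sketch of such a comparison might look like this. The function name and the tie rule (the client copy is kept when versions are equal, so local optimistic updates survive) are assumptions made for the example.

```javascript
// Decide which copy of a metric the client should keep
function pickRelevantMetric(clientMetric, serverMetric) {
    if (!clientMetric) return serverMetric;
    if (!serverMetric) return clientMetric;

    // Assumption: on equal versions the client copy wins
    return serverMetric.version > clientMetric.version ? serverMetric : clientMetric;
}

const cachedOnClient = { version: 1, payload: { adoptionLevel: 81 } };
const recalculatedOnServer = { version: 2, payload: { adoptionLevel: 78 } };

const relevant = pickRelevantMetric(cachedOnClient, recalculatedOnServer);
// relevant is the server copy, because its version is higher
```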

Issue 2. I need to create entities offline and use their ids to reference them later. How to deal with that?

We adopted a simple but imperfect solution: we generate the id manually on the client with uuid and make sure that we save the entity in the DB with this id. But keep in mind that it’s always better to control that kind of data on the backend, in case you change the DB or, say, migrate from UUID v4 to UUID v5. As an option, you can use temporary ids on the client and replace them with real ids after the entities are created on the backend.
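The temporary id option is not what we implemented, so the following is only a sketch of how it could work. It assumes the backend returns a map from temporary ids to real ones; `replaceTemporaryIds` and the `discussionId` payload field are hypothetical.

```javascript
// Swap temporary ids in pending events for the real ids issued by the backend
function replaceTemporaryIds(events, idMap) {
    return events.map((event) => {
        const realId = idMap[event.payload.discussionId];

        // Events that do not reference a temporary id are returned untouched
        if (!realId) return event;

        // Build a new event instead of mutating the stored one
        return { ...event, payload: { ...event.payload, discussionId: realId } };
    });
}

const pendingEvents = [
    { type: 'LEAVE_COMMENT', payload: { discussionId: 'tmp-1', text: 'Hello' } },
    { type: 'LEAVE_COMMENT', payload: { discussionId: '5f1d7c2e', text: 'Hi' } }
];

// Hypothetical server response after the discussion was created on the backend
const idMap = { 'tmp-1': '60a7b3f9' };

const resolvedEvents = replaceTemporaryIds(pendingEvents, idMap);
// resolvedEvents[0].payload.discussionId is '60a7b3f9'
```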

Issue 3. What should I use for data persistence in RN?

We don’t use any external solutions for this purpose, because we needed to secure the data by encrypting it, and it seemed far easier to implement this ourselves. But we use redux-async-initial-state for asynchronous loading of the initial app state.

Here is how we create the Redux store:

    import { createStore, applyMiddleware, combineReducers, compose } from 'redux';
    import thunkMiddleware from 'redux-thunk';
    import * as asyncInitialState from 'redux-async-initial-state';
    import { setAutoPersistingOfState, getPersistedState } from '../utils/offlineWorkUtils';
    import rootReducer from '../reducers';

    const reducer = asyncInitialState.outerReducer(combineReducers({
        ...rootReducer,
        asyncInitialState: asyncInitialState.innerReducer
    }));

    const store = createStore(
        reducer,
        compose(applyMiddleware(thunkMiddleware, asyncInitialState.middleware(getPersistedState)))
    );

    setTimeout(() => {
        setAutoPersistingOfState(store);
    }, config.stateRehydrationTime);

    export default store;

And here are the main utils from offlineWorkUtils:

    export async function getPersistedState() {
        // A wrapping function around AsyncStorage.getItem()
        const encodedState = await getFromAsyncStorage(config.persistedStateKey);

        // Nothing has been persisted yet, so there is nothing to decrypt
        if (!encodedState) return undefined;

        // A wrapping function around CryptoJS.AES.decrypt()
        return decryptAesData(encodedState);
    }

    export async function setAutoPersistingOfState(store) {
        setInterval(async () => {
            const state = store.getState();

            if (!state || !Object.keys(state).length) return;

            try {
                // A wrapping function around CryptoJS.AES.encrypt()
                const encryptedStateInfo = encryptDataWithAes(state).toString();

                // A wrapping function around AsyncStorage.setItem()
                await saveToAsyncStorage(config.persistedStateKey, encryptedStateInfo);
            } catch (e) {
                console.error('Error during state encryption', e);
            }
        }, config.statePersistingDebounceTime);
    }

For large blobs (images, audio, etc.) we use react-native-fs.
